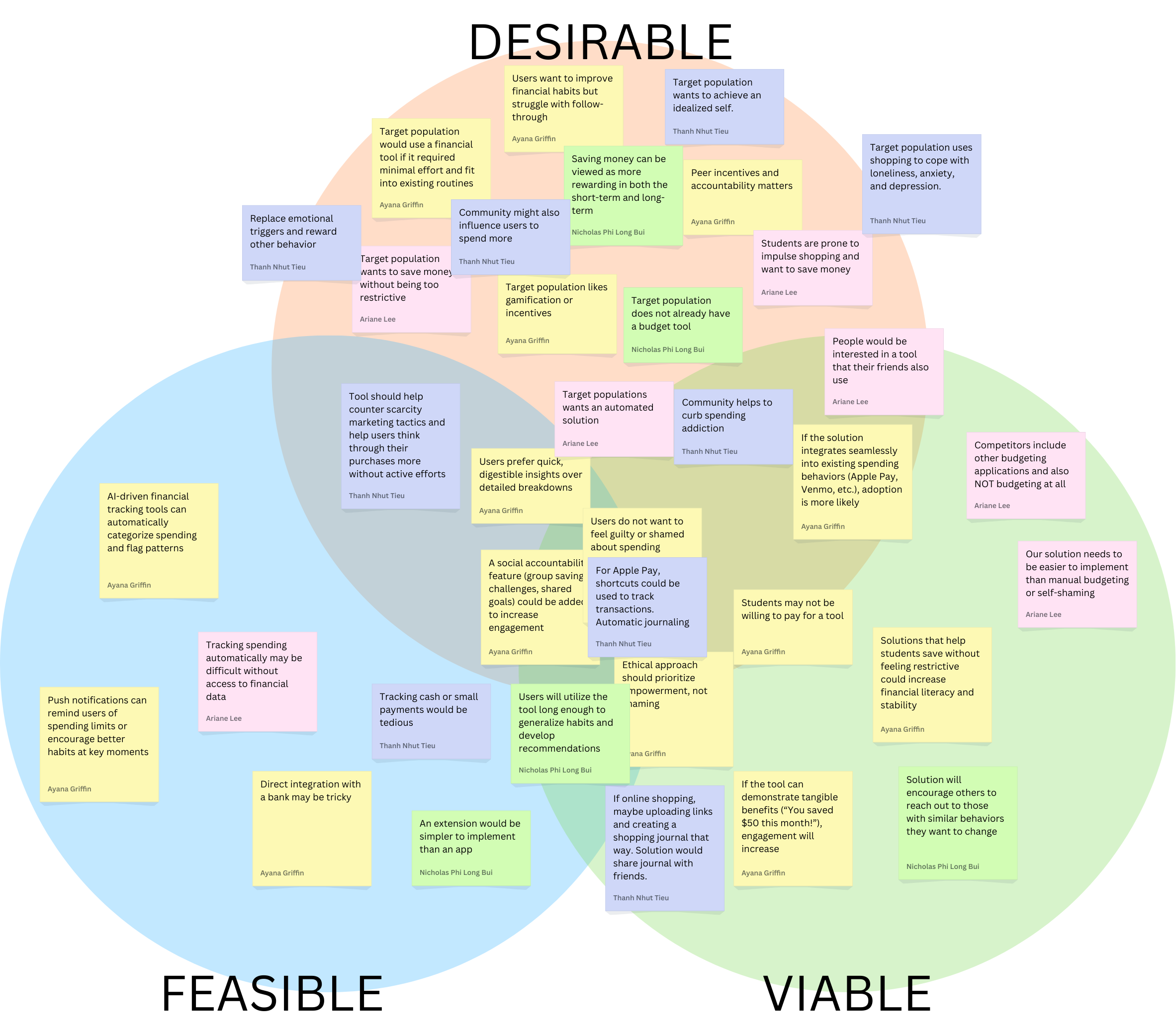

Our assumption map revealed nuances in how people think about their spending habits and their desire to become more mindful consumers. We surfaced assumptions about who struggles most with impulse purchases, what motivates people to track their spending, which alternative behaviors they prefer to impulsive buying, and which features would actually help them pause before a purchase. Through our discussions, we identified key uncertainties around how people want to interact with spending controls – particularly the balance between gentle nudges and firm restrictions. Some assumptions evolved into more specific questions, such as whether users prefer real-time spending alerts or daily summaries, and whether social comparison features help or hinder financial mindfulness.

These refined assumptions either highlighted critical unknowns we need to test, or gave us concrete hypotheses about which approaches might best support lasting behavior change in spending habits.

We chose to test the following key assumptions:

- Assumption: Users prefer quick, digestible insights over detailed breakdowns

- Potential Test: We can conduct a simple A/B preference test. We’ll create two mockups: Version A, a one-sentence spending summary with a visual trend indicator, and Version B, a detailed category breakdown with a pie chart or table. We’ll then show both versions to students and ask which format they prefer, which feels more useful, and which they would check regularly. If most users prefer Version A, this supports the assumption that quick insights are more engaging. If responses are mixed, a hybrid approach (quick insights with an option to expand into details) might be more effective.

- Assumption: Target population would use a financial tool if it required minimal effort and fit into existing routines

- Potential Test: We can present solutions from our comparative research, arranged along a manual-to-automatic scale, and ask users to review them and rank which ones they’d use. After ranking, we’ll ask why they chose their top option and what would make them consider a lower-ranked option. This test will reveal which level of automation is most desirable and whether lower effort makes a tool more appealing to adopt. If the most automated tools are ranked highest, this supports our assumption.

- Assumption: A social accountability feature (group savings challenges, shared goals) would increase engagement

- Potential Test: We can conduct a preference test. We’ll present two options: (1) a solo savings goal, where users track progress privately, and (2) a group savings challenge, where friends set a shared goal and see each other’s progress. We’ll ask which option feels more motivating, whether they would join a challenge, and if public tracking would encourage or discourage them. If most prefer the group feature, this supports the assumption that social accountability boosts engagement. If responses vary, adding optional privacy settings or low-pressure challenges might be necessary. To further validate, we can run a social media poll or prototype a basic challenge feature to measure real engagement.
